Reverse Engineering the Sierra Adventure Game Interpreter

I find the process of reverse engineering classic game engines and architectures to be a fascinating topic. It’s particularly interesting to me when I find a game that I played growing up and discover the innovative ideas that early game developers had to come up with to overcome the hardware limitations of early PCs, as well as the completely different architectures they were targeting. For example, Fabien Sanglard did an excellent review of Another World and how it used a virtual machine - back when that was a very novel idea. I remember playing that game and being blown away by the graphics and gameplay and trying to understand how games like these were designed.

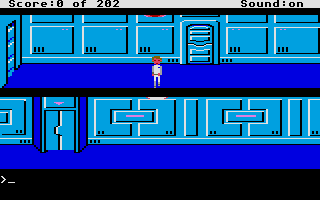

One of the earliest games I played was Space Quest I (SQ1). I was quite young at the time and since I was both learning to read and not a native English speaker it was a slow process, but I remember being completely fascinated by the magic of computers - it could honestly have been one of the triggers for becoming a software engineer. Even though this genre of games is around 40 years old, there is still quite a lot of interest in these games and niche communities of software-minded folks who reverse engineer the game engines are re-implement the designs in modern languages. The engine behind SQ1 - the Sierra Adventure Game Interpreter - even has it’s own community-driven wiki!

I came across an article which explores parsing the WORDS.TOK file using Objective C. I have vague recollections of trying to open this file in a binary editor but being disappointed that I couldn’t understand any of it. The community-driven documentation gives a brief description of the format of this file - it’s incredible to think that this was all reverse-engineered from the original source code.

I decided to try and implement a parser for this file based on the wiki description - it’s a fun way to dip your toes into reverse engineering without trying to re-implement an entire game engine. (This is very similar to the Wolfenstein 3D Fizzlefade Algorithm exploration I did a few years ago.)

Here is the wiki documentation:

The words.tok file is used to store the games vocabulary, i.e. the dictionary of words that the interpreter understands. These words are stored along with a word number which is used by the said test commands as argument values for that command. Many words can have the same word number which basically means that these words are synonyms for each other as far as the game is concerned.

The file itself is both packed and encrypted. Words are stored in alphabetic order which is required for the compression method to work.

As I learnt later on, the file is not actually encrypted - or at least not in the version I was testing against - but it is packed. The documentation doesn’t really explain in what way it’s packed - maybe it’s a very common or obvious pattern - but I didn’t understand it at first.

The first section

At the start of the file is a section that is always 26x2 bytes long. This section contains a two byte entry for every letter of the alphabet. It is essentially an index which gives the starting location of the words beginning with the corresponding letter.Byte Meaning ----- ----------------------------------------------------------- 0-1 Hi and then Lo byte for 'A' offset ... 50-51 Hi and then Lo byte for 'Z' offset 52 Words section ----- -----------------------------------------------------------The important thing to note from the above is that the 16 bit words are big-endian (HI-LO). The little endian (LO-HI) byte order convention used everywhere else in the AGI system is not used here. For example, 0x00 and 0x24 means 0x0024, not 0x2400. Big endian words are used later on for word numbers as well.

All offsets are taken from the beginning of the file. If no words start with a particular letter, then the offset in that field will be 0x0000.

The words section

Words are stored in a compressed way in which each word will use part of the previous word as a starting point for itself. For example,forearmandforestboth have the prefixfore. Ifforestcomes immediately afterforearm, then the data forforestwill specify that it will start with the first four characters of the previous word. Whether this method is used for further confusion for would be cheaters or whether it is to help in the searching process, I don’t yet know, but it most certainly isn’t purely for compression since the words.tok file is usally quite small and no attempt is made to compress any of the larger files (before AGI version 3 that is).Byte Meaning ----- ----------------------------------------------------------- 0 Number of characters to include from start of prevous word 1 Char 1 (xor 0x7F gives the ASCII code for the character) 2 Char 2 ... n Last char n+1 Wordnum (LO-HI) -- see below ----- -----------------------------------------------------------If a word does not use any part of the previous word, then the prefix field is equal to zero. This will always be the case for the first word starting with a new letter. There is nothing to indicate where the words starting with one letter finish and the next set starts, infact the words section is just one continuous chain of words conforming to the above format. The index section mentioned earlier is not needed to read the words in which suggests that the whole words.tok format is organised to find words quickly.

The documentation gave me a rough mental model of the file - the first 52 bytes contains some sort of index into the file itself, followed by all the words themselves. There is the complication that there is compression applied, which logically makes sense, as well as the word number which groups words which are considered synonyms. This roughly tracks with my memory of the game where look room and look around would be interchangeable - you often had to figure out what word the game would accept for an object on screen.

I started off trying to implement a first version of the parser, but quickly ran into problems - the documentation doesn’t really say what offset means (is that in bytes?) or how to determine when a word ends. I quickly switched from trying to follow the documentation to reverse-engineering some of the reverse-engineering solutions in Pascal and Objective C. My initial solution looked like this:

module WordsParser

BIG_ENDIAN_UNSIGNED_SIXTEEN_BIT = "n"

UNSIGNED_EIGHT_BIT = "C"

def self.parse_words(file_path)

dictionary = {}

previous_word, current_word = "", ""

File.open(file_path, "rb") do |file|

file.seek(1, IO::SEEK_SET)

initial_position = file.readbyte

file.seek(initial_position, IO::SEEK_SET)

loop do

previous_word = current_word

current_word = ""

byte = file.read(1)

break if file.eof?

prefix_length = byte.unpack1(UNSIGNED_EIGHT_BIT)

current_word = previous_word[0, prefix_length]

loop do

byte = file.read(1)

break if file.eof?

value = byte.unpack1(UNSIGNED_EIGHT_BIT)

if value < 32

current_word << (value ^ 0x7F).chr

elsif value == 95

current_word << " "

elsif value > 127

current_word << ((value - 128) ^ 0x7F).chr

break

else

# Ignore

end

end

word_number_bytes = file.read(2)

break unless word_number_bytes

word_number = word_number_bytes.unpack1(BIG_ENDIAN_UNSIGNED_SIXTEEN_BIT)

dictionary[word_number] ||= []

dictionary[word_number] << current_word

end

end

dictionary

end

endI tested my solution against the WORDS.TOK file from King’s Quest 1 - which apparently you can just download now, I guess the copyright has long since expired? It was fun to see the actual words being parsed, but I really didn’t understand the solution - and it didn’t quite match up against what was written in the wiki (which isn’t all that surprising - since it’s completely community-driven and based on an understanding of the reverse-engineered source code).

I started with this snippet:

value = byte.unpack1(UNSIGNED_EIGHT_BIT)

if value < 32

current_word << (value ^ 0x7F).chr

elsif value == 95

current_word << " "

elsif value > 127

current_word << ((value - 128) ^ 0x7F).chr

break

else

# Ignore

endI started off by seeing why 95 is special, why can’t we handle that one the same as values under 32? (Also, what’s special about 32?)

irb(main):001> (95 ^ 0x7F).chr

=> " "So there is nothing special, we can still do the XOR operation in that case. Then I checked to see what is being ignored (and why are there characters in the file that we should ignore?), so I did some printf debugging:

# Ignore

puts "Ignoring '#{(value ^ 0x7F).chr}', current_word: #{current_word}"That gave me one ignored character:

Ignoring '-', current_word: fourThis seems to be a bug in the community implementations that I’ve seen - ignoring the dash is probably incorrect here. The JSON output from my script included this:

"74": [

"clover",

"four leaf clover",

"fourleaf clover",

"fourleaf clover"

],The point of the dash was to allow players typing in four-leaf clover, so if you only allow characters under 32 (and spaces) then you actually introduce a game bug. I fixed this issue which added the dash in the dictionary correctly:

"74": [

"clover",

"four leaf clover",

"four-leaf clover",

"fourleaf clover"

],This gave me some confidence that I’m on the right track. I did some reading on how packing works and the algorithm started to make sense - the game developers basically wanted to write a bunch of words into a file, but in order to save space you consider the actual ASCII code of each character.

irb(main):003> ('a'..'z').map(&:ord)

=> [97, 98, 99, 100, 101, 102, 103, 104, 105, 106, 107, 108, 109, 110, 111, 112, 113, 114, 115, 116, 117, 118, 119, 120, 121, 122]You can XOR these values with 0x7F (01111111 in binary) to transpose the ASCII codes into a lower range, which helps to flip the characters into a more compressible range (when used with other compression techniques).

('a'..'z').map(&:ord).map { |ord| ord ^ 0x7F }

=> [30, 29, 28, 27, 26, 25, 24, 23, 22, 21, 20, 19, 18, 17, 16, 15, 14, 13, 12, 11, 10, 9, 8, 7, 6, 5]This still doesn’t really explain so many of the solutions only consider characters under 32 (why not 30 or less?), but I suspect that might just be a bug. Now for the check on values higher than 127 - since you’re not using the most significant bit you can set that bit to indicate that the character is the last character in the word. Now the code makes more sense - and it can be simplified.

def parse_word(file)

word = String.new

loop do

break unless (byte = file.read(1))

value = byte.unpack1(UNSIGNED_EIGHT_BIT)

if value > 127

word << ((value - 128) ^ 0x7F).chr

break

else

word << (value ^ 0x7F).chr

end

end

word

endNow for the index part - the wiki says that the file starts with an index of 26 * 2 = 52 bytes. I assume that the original idea was that you could look up the words starting with a given letter by loading the offset from the index, seeking to that offset, and then parsing the words. The part that didn’t make sense to me was how most of the implementations I looked at all loaded the offset at byte 1 (not 0?), seeking to that offset, and then parsing from there.

My mental model said that the first two bytes should contain the offset for the letter a, but you should be able to skip over the first 52 bytes and start parsing the words. I tested by idea by trying to parse the index itself and printing the letters and offsets:

def parse_index(file_path)

File.open(file_path, 'rb') do |file|

('a'..'z').each_with_object({}) do |letter, index|

index[letter] = file.read(2).unpack1(BIG_ENDIAN_UNSIGNED_SIXTEEN_BIT)

end

end

end{

"a": 51,

"b": 194,

"c": 468,

"d": 760,

"e": 865,

"f": 925,

"g": 1187,

"h": 1399,

"i": 1484,

"j": 1539,

"k": 1555,

"l": 1645,

"m": 1838,

"n": 1951,

"o": 2023,

"p": 2096,

"q": 2316,

"r": 2328,

"s": 2470,

"t": 2841,

"u": 3035,

"v": 3065,

"w": 3084,

"x": 0,

"y": 3260,

"z": 0

}This is exactly what I expected to see - for letters that don’t have any words in the dictionary the offset is 0. Since a usually does have words in the dictionary (and the first byte in that offset is 0), doing file.seek with the offset at byte 1 works, but it’s not accurate. If you want to find the words starting with a you should parse the first two bytes as an offset and seek to that location in the file. In order to parse the words you should file.seek(51, IO::SEEK_SET) to skip over the index. Note that we’re doing 51, not 52, so that the next byte you read will be the first byte of the first word.

I did some more minor cleanups (removing unnecessary guard clauses and variables) to get to a pretty clean solution:

module WordsParser

INDEX_SIZE = 26 * 2

BIG_ENDIAN_UNSIGNED_SIXTEEN_BIT = 'n'

UNSIGNED_EIGHT_BIT = 'C'

class << self

def parse_words(file_path)

dictionary = {}

current_word = ''

File.open(file_path, 'rb') do |file|

file.seek(INDEX_SIZE - 1, IO::SEEK_SET)

loop do

prefix_length = file.read(1).unpack1(UNSIGNED_EIGHT_BIT)

current_word = current_word[0, prefix_length]

current_word << parse_word(file)

break if current_word.empty?

word_number = file.read(2).unpack1(BIG_ENDIAN_UNSIGNED_SIXTEEN_BIT)

dictionary[word_number] ||= []

dictionary[word_number] << current_word

end

end

dictionary

end

def parse_index(file_path)

File.open(file_path, 'rb') do |file|

('a'..'z').each_with_object({}) do |letter, index|

index[letter] = file.read(2).unpack1(BIG_ENDIAN_UNSIGNED_SIXTEEN_BIT)

end

end

end

private

def parse_word(file)

word = String.new

loop do

break unless (byte = file.read(1))

value = byte.unpack1(UNSIGNED_EIGHT_BIT)

if value > 127

word << ((value - 128) ^ 0x7F).chr

break

else

word << (value ^ 0x7F).chr

end

end

word

end

end

endThe code is available on Github. If you’re interested in exploring other legacy game engines, I would recommend you check out:

- Fabien Sanglard’s Website

- Masters of Doom: How Two Guys Created an Empire and Transformed Pop Culture

- Game Engine Black Book: Wolfenstein 3D

- Game Engine Black Book: DOOM

- The Making of Prince of Persia: Journals 1985-1993

Any that I’m missing? Leave a comment.