Why FIFA.com crashed

If you’re a soccer fan like me (the World Cup is only 2 weeks away!), you have probably encountered considerable frustration in the past week. The cause is FIFA’s ticketing system. FIFA allows fans to buy tickets through the FIFA.com website (which generally works pretty well), but in the past few weeks there have been a number of outages, leading to some seriously annoyed customers. This culminated on Friday: FIFA had announced that 150 000 extra tickets would go on sale by 9 AM, but the website simply couldn’t handle the extra load, and 24 hours later the problem still hadn’t been resolved.

I decided to have a look at the FIFA.com website to try and determine the cause of these problems. I obviously have no idea about the server infrastructure or failover setup, but I can examine the website itself and see whether the problems are simply due to the enormous load or whether they could possibly have been avoided. What follows are simply ideas I would have tried to implement if I were in charge of the FIFA.com site. There is obviously no way to know whether or not they would have avoided the problems encountered.

DISCLAIMER: I don’t consider myself a web expert. If I make any glaring mistakes in my analysis, please enlighten me – I’m still learning as I go along.

The Homepage

To get started, let’s take a look at the World Cup Homepage. In this screenshot I actually zoomed out as far as Chrome would allow (and there’s still more content cut off at the bottom of the page). See that red line in the middle of the picture? That’s the ‘fold’ – to see anything below that line I would need to scroll down. I would argue that there’s simply too much content on the Homepage. Since this page will be hit by every single user who visits the website, I would keep the content here to a minimum and perhaps move all news articles to a dedicated news section. Obviously it’s difficult to find a balance between optimization and content, but I would rather serve less content than have my entire site be unavailable for a day.

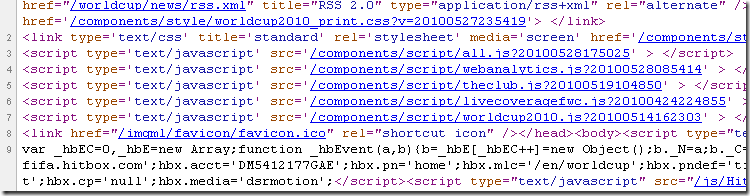

Ok, so let’s have a look at the source. First things first – the page being served has a .html extension. This is good, it means the server is serving a static page and doesn’t have to execute any code to serve the page. If you’re trying to optimize your site for high traffic volumes, you can’t really do any better than static HTML.

The Domain

Let’s take a look at where the images are being served from – here is an example: http://img.fifa.com/imgml/worldcup/head/logoFIFA.gif. This is great – images are being hosted on a different domain. This is important for 2 reasons. Firstly, the HTTP/1.1 specification suggests that browsers load no more than 2 components in parallel per domain, so if you serve content from multiple domains you can get more than 2 downloads to occur in parallel. Secondly, the second domain is cookie-less (I verified this with Fiddler), which means that when the browser requests an image it doesn’t need to send any cookies along with the request. Smaller requests mean content can be retrieved faster. My only issue here is that JavaScript and CSS are not being served from the second domain.
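To make the per-domain limit a bit more concrete, here is a rough Python sketch that groups a page's resource URLs by hostname and estimates how many downloads could run at once. Only the logo URL comes from the actual page; the other URLs are hypothetical, and the limit of 2 connections per host is an assumption based on the HTTP/1.1 suggestion (real browsers may use a different number).

```python
from collections import Counter
from urllib.parse import urlparse

# Assumed limit, following the HTTP/1.1 suggestion of 2 connections per host.
MAX_PARALLEL_PER_HOST = 2


def parallel_slots(urls, per_host=MAX_PARALLEL_PER_HOST):
    """Return (requests per hostname, upper bound on simultaneous downloads)."""
    hosts = Counter(urlparse(u).hostname for u in urls)
    slots = sum(min(count, per_host) for count in hosts.values())
    return hosts, slots


urls = [
    "http://img.fifa.com/imgml/worldcup/head/logoFIFA.gif",  # from the page
    "http://img.fifa.com/example/a.gif",     # hypothetical
    "http://img.fifa.com/example/b.gif",     # hypothetical
    "http://www.fifa.com/example/site.css",  # hypothetical
    "http://www.fifa.com/example/site.js",   # hypothetical
]
hosts, slots = parallel_slots(urls)
print(dict(hosts), slots)
```

With everything on one hostname the upper bound would be 2; splitting the same five resources over two hostnames raises it to 4.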

The HTML itself

Let’s take a look at the HTML. The first thing I looked at is the amount of whitespace – obviously whitespace is not going to make a massive difference to the speed of your site, but it’s something that’s incredibly easy to remove (there are probably 100 different ways to remove whitespace with IIS). There does appear to be relatively little whitespace in the page itself, but some of it still remains.
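I don't know how FIFA.com strips its whitespace, but as a minimal sketch of the idea, the following Python function removes whitespace that sits between two tags. This is a deliberately naive approach and an assumption on my part: it can change rendering between inline elements and it would mangle `<pre>` blocks, so a real site would use a proper HTML minifier.

```python
import re


def strip_inter_tag_whitespace(html):
    """Remove runs of whitespace that appear directly between two tags."""
    return re.sub(r">\s+<", "><", html.strip())


page = "<ul>\n  <li>a</li>\n  <li>b</li>\n</ul>\n"
smaller = strip_inter_tag_whitespace(page)
print(len(page), len(smaller))
```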

A few lines from the top the first real problems appear.

My first complaint here is that the JavaScript includes are done at the top of the page. As a rule of thumb CSS includes should be done at the top of the page and JavaScript includes at the bottom of the page. While browsers usually fetch multiple resources in parallel, this does not apply to scripts – while a script is downloading the browser won’t start any other downloads, even on different hostnames.

The second issue here is the use of multiple Style Sheets and JavaScript files. For each separate file the browser will need to make an additional request which our server will need to handle. So instead of the browser having to make 1 request for the Style Sheet and 1 for the JavaScript library, we’re making 2 calls for the Style Sheet and 6 for the JavaScript libraries. To optimize the site I would suggest that all Style Sheets and JavaScript files be combined into single files.
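Combining files can be as simple as a build step that concatenates them. The Python sketch below shows the idea for JavaScript; the file names and contents are hypothetical, and a real build would read the files from disk and probably minify them as well.

```python
def combine_scripts(sources):
    """Join several JavaScript sources into one bundle.

    A ';' is added after each file so that a file ending without a
    semicolon cannot run into the start of the next one.
    """
    parts = []
    for name, code in sources:
        parts.append(f"/* {name} */\n{code.rstrip()}\n;")
    return "\n".join(parts)


# Hypothetical file names and contents, for illustration only.
bundle = combine_scripts([
    ("a.js", "var a = 1"),
    ("b.js", "var b = a + 1;"),
])
print(bundle)
```

The page then references the single bundle, so the browser makes one request instead of one per library.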

The third issue here is the use of inline JavaScript. Browsers will cache resources like images, Style Sheets and JavaScript files, but the browser cannot cache inline JavaScript. If you’re looking to optimize your website you can’t make mistakes like this.

Lastly, the HTTP/1.1 specification says that caches should not treat responses to URLs with query strings as fresh unless the server sends an explicit expiration time. This means that 7 of the 10 resources in this snippet may not be cached by the browser. In reality IE and Firefox do cache URLs with query strings, but this is mainly because so many sites don’t follow the specification (apparently Opera and Safari don’t cache URLs with query strings). We should always develop against the specification, not against what the browser does – this is how we got into this IE6 hell in the first place.
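A quick way to audit a page for this is to list the resource URLs that carry a query string. Here is a small Python sketch; the URLs are hypothetical stand-ins, not the actual FIFA.com resources.

```python
from urllib.parse import urlsplit


def has_query_string(url):
    """True if the URL has a non-empty query component (the part after '?')."""
    return urlsplit(url).query != ""


# Hypothetical resource URLs, for illustration only.
resources = [
    "http://www.fifa.com/example/site.css?v=3",
    "http://www.fifa.com/example/site.js?ver=1.2",
    "http://www.fifa.com/example/plain.js",
]
flagged = [u for u in resources if has_query_string(u)]
print(len(flagged), "of", len(resources), "resources use a query string")
```

One common alternative is to put the version number in the file name or path rather than in the query string, so that the URL still changes when the file does.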

The JavaScript

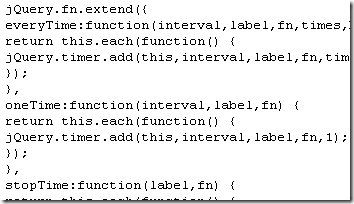

If you’re trying to streamline your JavaScript hosting, the first thing you will probably do is minify your JavaScript libraries. This means stripping all unnecessary whitespace and newlines from your libraries, which reduces the size of the files you need to send to the browser. For example, compare the jQuery library against one of the FIFA.com libraries.

(The one on the right is one of the FIFA.com libraries) Again, this might be a small issue, but it all adds up and it’s so incredibly easy to fix.

The Server-Side coding

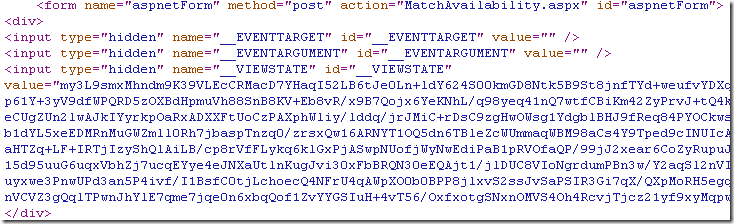

As I’ve already mentioned, I obviously have no idea about the quality of code on the server. However, we can pretty easily determine which framework is being used on the server. Take a look at the following snippet.

Yup, they’re using WebForms. I don’t want to get into an argument of WebForms vs MVC, but I really don’t think WebForms is the best solution in this case. The overhead of ViewState is simply not going to scale well enough to handle the amount of traffic you’re expecting on a site like this. You can try and throw hardware at the problem, but in the end your site is going to be slow and possibly crash completely when high traffic loads hit your site.
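To get a feel for how much ViewState a page carries, you can measure the hidden `__VIEWSTATE` field that WebForms adds to the page. The Python sketch below does this with a regular expression. The sample markup and its value are made up for illustration, and the pattern assumes the `name` attribute appears before `value`, which is how the field is normally rendered.

```python
import re

# Assumes name="__VIEWSTATE" comes before value="..." inside the <input> tag.
VIEWSTATE_RE = re.compile(
    r'<input[^>]*name="__VIEWSTATE"[^>]*value="([^"]*)"', re.IGNORECASE
)


def viewstate_chars(html):
    """Return the length of the __VIEWSTATE value, or 0 if the field is absent."""
    match = VIEWSTATE_RE.search(html)
    return len(match.group(1)) if match else 0


# Made-up sample; a real page's value is typically far longer.
sample = (
    '<form><input type="hidden" name="__VIEWSTATE" '
    'id="__VIEWSTATE" value="/wEPDwUKMTIz" /></form>'
)
print(viewstate_chars(sample))
```

Every character counted here is sent to the browser with each page, and sent back to the server on every postback.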

HTTP Compression

HTTP compression is something that I’ve only recently been learning about. When the browser makes a request it adds an Accept-Encoding header to indicate which types of compression it understands. The server can then use one of these compression methods to compress the data it’s sending to the client, and it names the method it chose in the Content-Encoding response header. The most common HTTP compression methods are GZIP and deflate. This is something else FIFA.com did get right – if I make a request with Chrome the content is served using GZIP compression.
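The negotiation is easy to sketch in Python. The request header that carries the browser's list is `Accept-Encoding`, and the server answers with `Content-Encoding`. The function names and the sample body below are my own, and the sketch is simplified: it only knows about gzip and ignores quality values such as `gzip;q=0`.

```python
import gzip


def negotiate(accept_encoding):
    """Pick gzip if the client lists it, otherwise send the body unchanged."""
    offered = [
        token.split(";")[0].strip().lower()
        for token in accept_encoding.split(",")
    ]
    return "gzip" if "gzip" in offered else "identity"


def encode_body(body, accept_encoding):
    """Return (possibly compressed body, extra response headers)."""
    if negotiate(accept_encoding) == "gzip":
        return gzip.compress(body), {"Content-Encoding": "gzip"}
    return body, {}


# Repetitive markup, like most HTML, compresses very well.
html = b"<div class='news'>World Cup</div>\n" * 200
compressed, headers = encode_body(html, "gzip, deflate")
print(len(html), len(compressed), headers)
```

A client that doesn't advertise gzip simply gets the uncompressed body back with no `Content-Encoding` header.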

Conclusion

It’s obviously impossible to know whether or not any of the issues I mentioned here were actually responsible for the site’s downtime. In the best-case scenario fixing them might have prevented the crash; in the worst-case scenario it would make the site faster and reduce bandwidth costs. Keep in mind that there is a whole host of other techniques you can use to reduce the load on your server; these are only the main ones.